This is something I did for a course I’ve been taking, but as I couldn’t find much information on the topic, I thought it might be helpful to document it here. Let me know if there’s anything you think I’ve missed!

Overview

The internet is a rich source of dynamic data, and is increasingly used in our applications. Many popular websites, such as Twitter, Facebook, Foursquare and Google (various services, including Maps and Search) provide APIs that can be used by developers to integrate these services into applications by sending requests over HTTP.

Testing code that depends on remote APIs has many of the issues of database testing, such as:

- Large amounts of data

- Data-centric application

- Tests take a long time to run

It also has some new issues, mostly arising from our lack of control over the API and from its remote nature. Many of these issues have solutions, but the solutions rely less on testing techniques than on design; when relying on a remote API it is hard to depend on simple unit tests the way we might in a standalone application. Ultimately, the answer lies in writing testable code [1].

Testing Issues

Data is Unpredictable

Problem

When we build on top of an external API, we are often using it as a source of data to drive our application. However, we cannot set up a data element and populate it with expected values, which is what we can do when testing applications that use databases. Google Maps is the most popular API, and we can use it, for example, to transform coordinates into a location or vice versa, to display positions on a map, to get directions, etc. We can use the Google search API to get the results of search queries. We can use the Facebook API to get details about our friends. And we can use the Twitter search API to get real-time commentary on current events.

In the first case, the Maps API, the data (aside from mistakes) is fairly static. If I’m testing the coordinates for the University of Ottawa at 550 Cumberland, I can reasonably expect them to be the same tomorrow, and even next month.

In the next two cases, getting some information about Facebook friends (e.g. a list of friends, or their birthdays) and using the Google search API, the data changes over time. Some of the results may well be different tomorrow, although many will likely be the same.

The final example, Twitter search, is dynamic and real time. The same request 30 minutes later (or less for extremely popular words or phrases) may generate completely different information. At a minimum, the search API only goes back 10 days, so even for the most uncommon term the results will change.

When we cannot rely on data being the same, testing becomes more difficult. We cannot set up a search object with some specific expected data and use that as a test example. We can set up a Twitter profile or a Facebook profile with some expected data, but these are very small parts of the power of remote data accessible by API call and as such have limited utility. In the instance of the Facebook profile, for example, unless we have no friends at all, we cannot rely on the data returned for a list of friends being unchanged, as it is a relationship that the other party can end without the user’s consent. The same is true for a follower of a Twitter account; we have absolutely no control over them, and a large number of spam bots (who follow in the hope of being followed back, and unfollow if they aren’t) can make the follower list fairly dynamic.

Resolution

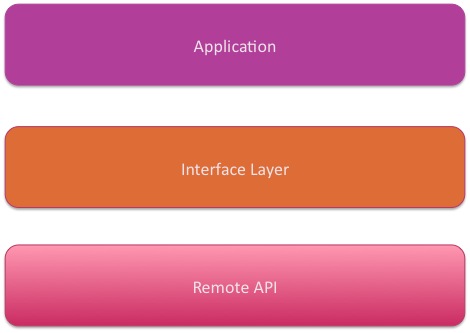

As a result of the unpredictability of data, we have to rely heavily on mock objects. There is (as yet) no equivalent of a controlled database that we can populate with data and verify for an API. Thus, the application should be architected for easier mocking, specifically with an interface between the API and the application, as shown in Figure 1.
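To make the idea of an interface between the API and the application a little more concrete, here is a minimal sketch in plain Java. All of the names (`TweetSearch`, `CannedTweetSearch`, `TrendSummary`) are made up for illustration and are not part of any real Twitter library; a mocking framework could stand in for the hand-written test double.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical names throughout: TweetSearch, CannedTweetSearch and
// TrendSummary are illustrations, not part of any real Twitter library.
final class ApiBoundarySketch {

    /** The only view of the remote API that the application sees. */
    interface TweetSearch {
        List<String> search(String query);
    }

    /** Hand-written test double that returns fixed, predictable data. */
    static final class CannedTweetSearch implements TweetSearch {
        private final List<String> cannedResults;

        CannedTweetSearch(List<String> cannedResults) {
            this.cannedResults = cannedResults;
        }

        @Override
        public List<String> search(String query) {
            // Return a copy so callers cannot alter the canned data.
            return new ArrayList<>(cannedResults);
        }
    }

    /** Application code depends on the interface, never on HTTP directly. */
    static final class TrendSummary {
        private final TweetSearch search;

        TrendSummary(TweetSearch search) {
            this.search = search;
        }

        /** Counts the results that mention the query, ignoring case. */
        int countMentions(String query) {
            int count = 0;
            for (String tweet : search.search(query)) {
                if (tweet.toLowerCase().contains(query.toLowerCase())) {
                    count++;
                }
            }
            return count;
        }
    }

    public static void main(String[] args) {
        TweetSearch fake = new CannedTweetSearch(Arrays.asList(
                "Ottawa is cold today", "Visiting OTTAWA soon", "Hello world"));
        System.out.println(new TrendSummary(fake).countMentions("ottawa"));
    }
}
```

Because `TrendSummary` only knows about the interface, the production implementation (the one that actually makes HTTP requests) can be swapped in without touching the code under test.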

Mocking data objects is time-consuming and not without difficulties. Careful consideration should be given as to which objects should be “newable” and which should not be [2][3].

Confirming Data is Correctly Parsed

Problem

The Twitter search API returns data in either a JSON or ATOM format. It is likely that we need our data in a different format than that, and our SUT may well consist of code to parse the returned data in order to pick out the bits that are important to us. Even if we are using a library like http://www.json.org/java/ (converts JSON into Java objects) that we trust works well (following the practice of testing only our own code), this is another potential testing issue because we may be expecting large amounts of data that will be difficult and time-consuming to mock.

Resolution

A tool designed to facilitate the use of matchers in combination with unit testing and mock objects is Hamcrest [4]. From [5],

“Hamcrest can also be used with mock objects frameworks by using adaptors to bridge from the mock objects framework’s concept of a matcher to a Hamcrest matcher. For example, JMock 1’s constraints are Hamcrest’s matchers. Hamcrest provides a JMock 1 adaptor to allow you to use Hamcrest matchers in your JMock 1 tests. JMock 2 doesn’t need such an adaptor layer since it is designed to use Hamcrest as its matching library. Hamcrest also provides adaptors for EasyMock 2.”

Hamcrest allows us to be much more open and flexible in our matching, and has utility where we don’t know exactly what our data is but can make some observations about it.
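The underlying idea, checking properties of the data rather than exact values, can be sketched without any library. The example below is plain Java and deliberately not Hamcrest; the helper names (`allMatch`, `isPlausibleTweet`) are hypothetical, and the 140-character limit is an assumption about tweet length at the time of writing.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;

// Plain-Java illustration of property-style checks. This is NOT Hamcrest,
// and the names used here are hypothetical.
final class LooseMatchingSketch {

    /** True when every element satisfies the given property. */
    static <T> boolean allMatch(List<T> items, Predicate<T> property) {
        for (T item : items) {
            if (!property.test(item)) {
                return false;
            }
        }
        return true;
    }

    /** A property we expect of any tweet text (assumes a 140-character limit). */
    static boolean isPlausibleTweet(String text) {
        return text != null && !text.isEmpty() && text.length() <= 140;
    }

    public static void main(String[] args) {
        // We do not know what the results will say, only what they should look like.
        List<String> results = Arrays.asList("Snow in Ottawa again", "Ottawa transit delays");
        System.out.println(allMatch(results, LooseMatchingSketch::isPlausibleTweet));
        System.out.println(allMatch(results, t -> t.toLowerCase().contains("ottawa")));
    }
}
```

A matcher library expresses the same kind of check more readably and gives better failure messages; the point here is only that the assertions describe the shape of the data, not its exact content.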

Service Availability

Problem

Many online APIs have rate limitations, in terms of the number of calls we can make to them in a given time period. This makes it difficult to run our tests as frequently as advocated by the Extreme Programming (XP) paradigm [6]. Further, at times the service may just be down due to high usage (for example after the death of Michael Jackson services all over the internet slowed noticeably [7]).

XP advocates test-driven development. In brief, this means that when a developer is about to implement some new functionality they first write a test case. They then code just enough to pass that test and run the entire test suite. If all tests pass, they move on to writing another test. If not, they fix the code and run the tests again, repeating as necessary.

It is clear that this approach can lead to a developer running the entire test suite many times an hour. In addition, the development process may include the stipulation that commits should only be accepted if all tests pass (mandating the “don’t break the tree” principle).

This leads to two issues:

- We may have to modify our development process in order to run our tests less frequently.

- If tests fail, we do not know if it is that our code is wrong, or if the service is down.

Resolution

For the first issue, we may want to design our test suite to minimize the number of API calls, perhaps even saving some responses to use for multiple tests (rather than making a new call to the API each time). We will need to keep track of the number of API calls resulting from our test suites and of the limitations of the service, and determine accordingly how frequently we can run our test suite.
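One way to save responses and keep track of how many real calls the test suite makes is a small caching wrapper. This is only a sketch under assumptions: `CachingClient` is a hypothetical name, and the remote call is represented by a plain `Function<String, String>` rather than a real HTTP client.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical sketch: CachingClient is not part of any real library, and the
// "remote call" is modelled as a function from request string to response string.
final class CachedApiSketch {

    /** Wraps a remote call, remembers each response, and counts real calls. */
    static final class CachingClient {
        private final Function<String, String> remoteCall;
        private final Map<String, String> savedResponses = new HashMap<>();
        private int remoteCallCount = 0;

        CachingClient(Function<String, String> remoteCall) {
            this.remoteCall = remoteCall;
        }

        /** Returns the saved response if there is one, otherwise calls the API once. */
        String get(String request) {
            if (!savedResponses.containsKey(request)) {
                remoteCallCount++;
                savedResponses.put(request, remoteCall.apply(request));
            }
            return savedResponses.get(request);
        }

        /** Number of calls that actually reached the remote API. */
        int getRemoteCallCount() {
            return remoteCallCount;
        }
    }

    public static void main(String[] args) {
        // Stand-in for the real API; a real suite would make an HTTP request here.
        CachingClient client = new CachingClient(request -> "response for " + request);
        client.get("search?q=ottawa");
        client.get("search?q=ottawa"); // served from the saved response
        System.out.println(client.getRemoteCallCount());
    }
}
```

Comparing the call count against the service’s rate limit after a test run gives a rough idea of how often the suite can be run.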

The second issue can be approached from both a design perspective and a testing perspective. From the design perspective, we need to design for graceful failure in the case of a non-responsive API (this can and should be tested using mock objects). From the testing perspective, we can instrument our test suite to indicate that tests are failing as a result of a non-responsive API rather than bugs in the codebase. In JUnit, this would mean reporting an error (by throwing an exception) rather than a failure (a failed assertion) for these issues.
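The distinction between a failure and an error can be sketched in plain Java. The tiny `run` method below only mimics the classification a test runner makes and is not JUnit itself; `ServiceUnavailableException` and `fetch` are hypothetical names introduced for the example.

```java
import java.io.IOException;
import java.util.concurrent.Callable;

// Hypothetical sketch: mimics the failure/error distinction without JUnit.
final class FailureVersusErrorSketch {

    /** Thrown when the remote API cannot be reached; signals "not our bug". */
    static final class ServiceUnavailableException extends RuntimeException {
        ServiceUnavailableException(String message, Throwable cause) {
            super(message, cause);
        }
    }

    enum Outcome { PASSED, FAILED, ERROR }

    /** Classifies a test body: assertion problems are failures, other exceptions are errors. */
    static Outcome run(Runnable test) {
        try {
            test.run();
            return Outcome.PASSED;
        } catch (AssertionError e) {
            return Outcome.FAILED;
        } catch (RuntimeException e) {
            return Outcome.ERROR;
        }
    }

    /** Translates a low-level I/O problem into the dedicated exception. */
    static String fetch(Callable<String> remoteCall) {
        try {
            return remoteCall.call();
        } catch (IOException e) {
            throw new ServiceUnavailableException("remote API did not respond", e);
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // Simulated outage: the test is reported as an error, not a failure.
        Outcome outage = run(() -> fetch(() -> { throw new IOException("timed out"); }));
        // Genuine bug: the response is not what the code under test should produce.
        Outcome bug = run(() -> {
            if (!"expected".equals(fetch(() -> "actual"))) {
                throw new AssertionError("unexpected response");
            }
        });
        System.out.println(outage + " " + bug);
    }
}
```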

Changing APIs

Problem

External APIs have some similarities to libraries in that they are designed and maintained by other people. However, we cannot just download an external API and add it to our build path, as we can in the case of a library. Although it is good practice to give developers using your APIs notice in advance of any changes, online business models can be precarious or non-existent (take the sudden shut-down of tr.im [8]) and this may not always be possible. This is an issue that arises in other uses of APIs, as outlined in [9].

This issue means that our codebase, including our tests, may have to be changed to reflect a changing API. For instance, Twitter is moving increasingly to OAuth and some of their newer API calls are not available without OAuth.

Resolution

This is mostly an issue of testing documentation – we need to be clear what our expectations are from the API, so that if the API changes and the expectations are violated, it is easy for a subsequent developer to make the alterations to the tests and the code to reflect the updated API.

External Requests Mean Slow Tests

Problem

In XP Explained [6], it is proposed that a test suite should take no longer to run than it does to get a cup of coffee. If the test suite takes longer to run, then it is suggested that the test suite is optimized until it can be run in this kind of time frame (around 10 minutes). In [10] Google Testing Guru Miško Hevery advocates a time of 2s for the whole test suite, as this means that tests can be run on every save, not just every commit.

However, when depending on an external API, run time is dependent on the speed of the internet connection and the response time of the service. The test suite may take longer to run than desired, with limited options for optimization.

Resolution

There is little that can be done about this; the number of API calls can be minimized, and the developer can invest in a faster connection, but the main limit on speed will be the response of the external API, and this is out of the developer’s control.

Dealing with Multiple APIs

Mashups are becoming increasingly popular; this is where two APIs are combined to create a new application on top of both of them. A popular mashup resource is Programmable Web [11].

Problem

If we are building an application using multiple APIs, we will need to interface between them. It is well known that bugs often occur at interfaces, and thus testing will be increasingly important. There are numerous mashup building tools available, designed to make the creation of mashups easier for non-programmer users; however, as noted in [12], these tools have limited support for testing and debugging.

Resolution

This issue means that the design best practices outlined elsewhere in this document are increasingly important: the more APIs we use, the more of our application is glue, which is hard to test. Well-designed interfaces for easier mocking of objects will be important, as will reduced dependencies.

Application Uses Subset of API

Problem

The API available has many options, but the application only uses one aspect of it. For example, we may be using the Twitter API but be interested only in real-time search, not user information.

Resolution

This issue is addressed in [13], where the Façade and Adapter patterns [14] are advocated as a solution; the Façade is an interface with a simplified view of the remote API. This means that the API is easier to mock, as only those aspects that the application will use must be defined. How much this matters depends on what is being used to mock the objects: whilst EasyMock allows partial mocking, JMock does not [15]. A simpler API means simpler mocking, and simpler testing.

The interface should be the ideal interface for the application being built. Excess complexity in the API is left out, and this means that changing to a different API later will be easier.
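As a rough sketch of what this might look like in Java: a narrow interface that exposes only real-time search, with an adapter on top of a wider client. Everything here is hypothetical. `WideTwitterClient` is a stand-in I made up, not a real library, and the fixed page and language defaults are assumptions.

```java
// Hypothetical sketch: none of these types belong to a real Twitter library.
final class FacadeSketch {

    /** Stand-in for a wide third-party client with far more than we need. */
    interface WideTwitterClient {
        String searchJson(String query, int page, String language);
        String userProfileJson(String userName);
        String followersJson(String userName);
    }

    /** The narrow interface the application actually wants. */
    interface RealTimeSearch {
        String latestJson(String query);
    }

    /** Adapter that implements the narrow interface on top of the wide client. */
    static final class TwitterRealTimeSearch implements RealTimeSearch {
        private final WideTwitterClient client;

        TwitterRealTimeSearch(WideTwitterClient client) {
            this.client = client;
        }

        @Override
        public String latestJson(String query) {
            // First page, English only: these defaults are assumptions for the sketch.
            return client.searchJson(query, 1, "en");
        }
    }

    public static void main(String[] args) {
        // With a single-method interface, a test double is a one-line lambda.
        RealTimeSearch fake = query -> "[{\"text\":\"about " + query + "\"}]";
        System.out.println(fake.latestJson("ottawa"));
    }
}
```

The application and its tests only ever see `RealTimeSearch`, so switching to a different search provider later means writing one new adapter.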

Application Code is Mostly Glue

Problem

As mentioned above, the more APIs are a part of our application, the more of our application is “glue code”: code that connects what we get from the API to what our application wants. For example, we can use the Twitter API and a JSON parsing library, but it is likely our code requires custom Java objects; thus the glue code will be what turns a JSON object into the Object type that our application works with.
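To give a feel for what such glue looks like, here is a small sketch. To stay self-contained it uses a `Map<String, Object>` as a stand-in for whatever the JSON library returns; the `Tweet` class, the `toTweet` method and the field names `from_user` and `text` are assumptions for illustration.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: a Map stands in for a parsed JSON object, and the
// field names "from_user" and "text" are assumptions about the response.
final class GlueCodeSketch {

    /** The object type our application actually works with. */
    static final class Tweet {
        final String author;
        final String text;

        Tweet(String author, String text) {
            this.author = author;
            this.text = text;
        }
    }

    /** Glue: picks out the fields we need and rejects malformed input. */
    static Tweet toTweet(Map<String, Object> parsed) {
        Object author = parsed.get("from_user");
        Object text = parsed.get("text");
        if (!(author instanceof String) || !(text instanceof String)) {
            throw new IllegalArgumentException(
                    "missing or non-string field; keys present: " + parsed.keySet());
        }
        return new Tweet((String) author, (String) text);
    }

    public static void main(String[] args) {
        Map<String, Object> parsed = new HashMap<>();
        parsed.put("from_user", "uottawa");
        parsed.put("text", "Classes resume Monday");
        parsed.put("id", 12345L); // extra fields are simply ignored
        Tweet tweet = toTweet(parsed);
        System.out.println(tweet.author + ": " + tweet.text);
    }
}
```

Keeping the glue in one small, pure method like this means it can be unit tested with hand-built maps, with no network access at all.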

Resolution

In [16], the testing of glue code is identified as one of the challenges for improving testing. When using a remote API, a significant part of the process will consist of gluing the API (which can be considered “reusable code”) to the application. In [17] a system for automating testing of glue code is outlined, where the components to be connected (or glued) are modelled as Finite State Machines (FSMs). I am not convinced that this approach is a practical one, or that it will work well with Test Driven Development.

Ultimately, perhaps the best that can be done here is to ensure that the glue is well separated from the application code. Thus, when building on a single API we will want an architecture like the one shown in Figure 1, and for multiple APIs one like the one in Figure 2.

Conclusion

Testing code that depends on a remote API is challenging. As such, it is even more important that applications like this are designed with testability in mind. Mock objects will need to be used extensively, which means interfaces are necessary and must be well designed. Dependencies need to be carefully considered and minimized in order to reduce the necessary mocking; the Law of Demeter [18] is particularly important.

Ultimately, whilst using a remote API has some particular challenges, following good design principles and testing practices can overcome most of them. The remaining obstacles are API limitations and the need for internet access. The first means considering rate limitations in our test cases and perhaps limiting how often our tests are run, for example running tests on every check-in rather than on every save. The second makes it necessary to work whilst connected to the internet (against much popular productivity advice); clearly this is not always possible, but in an increasingly connected world it is becoming less and less of a problem.

Bibliography

[1] “Why are we embarrassed to admit that we don’t know how to write tests?” Available: http://misko.hevery.com/2009/07/07/why-are-we-embarrassed-to-admit-that-we-dont-know-how-to-write-tests/.

[2] “To “new” or not to “new”…” Available: http://misko.hevery.com/2008/09/30/to-new-or-not-to-new/.

[3] “How to Think About the “new” Operator with Respect to Unit Testing” Available: http://misko.hevery.com/2008/07/08/how-to-think-about-the-new-operator/.

[4] “hamcrest – Project Hosting on Google Code” Available: http://code.google.com/p/hamcrest/.

[5] “Tutorial – hamcrest – Project Hosting on Google Code” Available: http://code.google.com/p/hamcrest/wiki/Tutorial.

[6] K. Beck and C. Andres, Extreme Programming Explained: Embrace Change, Addison-Wesley Professional, 2004.

[7] “BBC NEWS | Technology | Web slows after Jackson’s death” Available: http://news.bbc.co.uk/2/hi/technology/8120324.stm.

[8] “Tr.im URL Shortener Shuts Down; Short Links to Die?” Available: http://mashable.com/2009/08/09/trim-shuts-down/.

[9] C.R. de Souza, D. Redmiles, L.T. Cheng, D. Millen, and J. Patterson, “How a good software practice thwarts collaboration: the multiple roles of APIs in software development,” ACM SIGSOFT Software Engineering Notes, vol. 29, 2004, pp. 221–230.

[10] “Software Testing Categorization” Available: http://misko.hevery.com/2009/07/14/software-testing-categorization/.

[11] “ProgrammableWeb – Mashups, APIs, and the Web as Platform” Available: http://www.programmableweb.com/.

[12] L. Grammel and M.A. Storey, “An end user perspective on mashup makers,” University of Victoria Technical Report DCS-324-IR, 2008.

[13] “Interfacing with hard-to-test third-party code” Available: http://misko.hevery.com/2009/01/04/interfacing-with-hard-to-test-third-party-code/.

[14] E. Gamma, R. Helm, R. Johnson, and J.M. Vlissides, Design Patterns: Elements of Reusable Object-Oriented Software, Addison-Wesley Professional, 1994.

[15] “Mocking in Java: jMock vs. EasyMock” Available: http://jeantessier.com/SoftwareEngineering/Mocking.html.

[16] A. Bertolino, “Software testing research: Achievements, challenges, dreams,” 2007 Future of Software Engineering, 2007, pp. 85–103.

[17] R. Gotzhein and F. Khendek, “Compositional Testing of Communication Systems,” Testing of communicating systems: 18th IFIP TC 6/WG 6.1 International Conference, TestCom 2006, New York, NY, USA, May 16-18, 2006: proceedings, 2006, p. 227.

[18] “Law of Demeter – Wikipedia, the free encyclopedia” Available: http://en.wikipedia.org/wiki/Law_of_Demeter.
